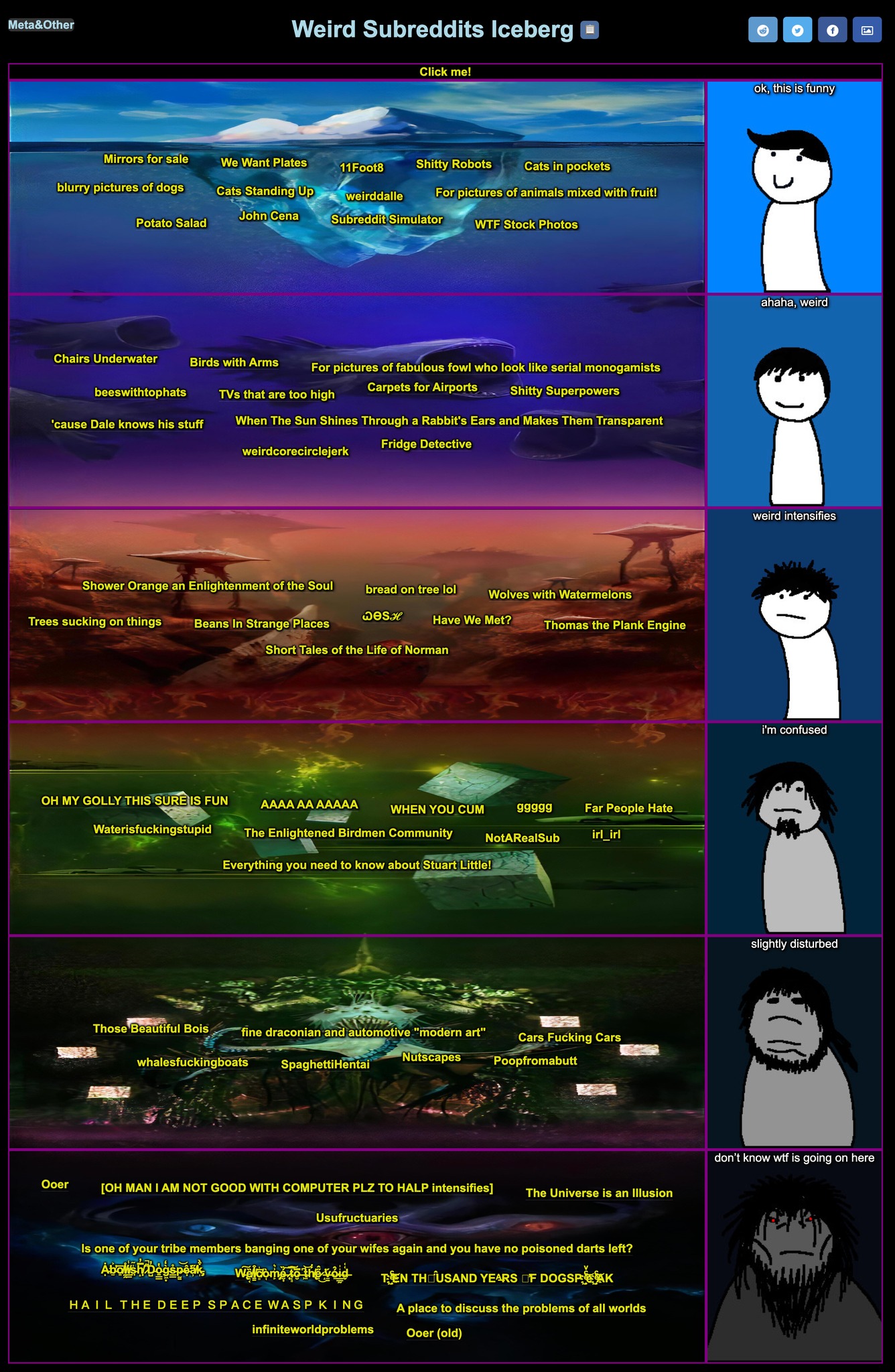

Andrei Kashcha made an impressive website that maps Reddit. It visualizes 116K subreddits and 1.5B comments (Nov 2024 to March 2025) and lets you interactively explore community connections across the platform. I could literally spend the rest of my life browsing this.