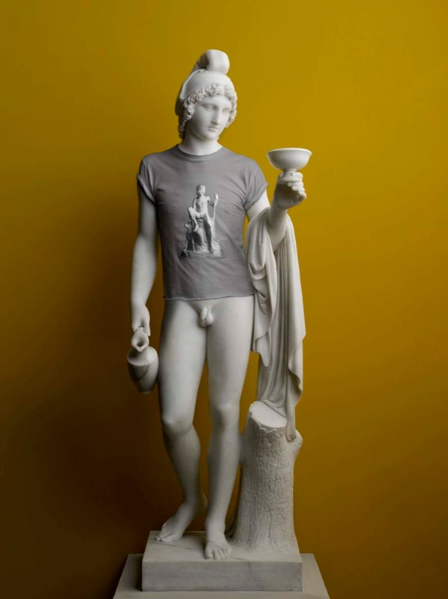

Mercury and Ganymede by Elmgreen & Dragset, 2009…

(via WeWasteTime)

The mission of the HTML11 is to fulfill the promise of XML for applying HTML11 to a wide variety of platforms with proper attention paid to internationalization, accessibility, device-independence, usability and life structuring.

(via Nerdcore)

Works by Jasper Elings:

“…With sources originating from digital readymades or appropriated video, each artist modifies, redirects and redistributes the footage using a wide array of alterations, from simple editing to more detailed and complex reconstructions. The digital realm casts a dark shadow over the initial intent of images and our preconceptions of their meaning and usage into a new alternative mode of existence where the source becomes either a catalyst or an added layer of a whole new work.”

(via i like this art)

(Via Is That All There Is?)

“From cars to CDs, houses to handbags, people are no longer aspiring to own. Belongings which used to be the standard by which to measure personal success, status, and security are increasingly being borrowed, traded, swapped, or simply left on the shelf. Various factors – arguably the most important being an increasingly connected and digitally networked society, regardless of economic development – are causing revolutionary global shifts in behavior. As quickly as a new laptop becomes yesterday’s technology in a brittle plastic shell, or a power tool idly collects dust in the garage, it seems that material possessions are changing from treasure into junk, from security into liability, from freedom into burden, and from personal to communal.”

read more here…

“Sewing machine orchestra is the first version of a performance created by Martin Messier. The basic sounds used in this performance consist entirely of the acoustic noises produced by 8 sewing machines, amplified by means of microcontacts and processed by a computer.”

(Via TRIANGULATION BLOG)

Strategies, 2010-2011, by Harm van den Dorpel…

Replaced Mona Lisa is a work by Mike Ruiz:

“The Mona Lisa with the lady selected then put through Content-Aware Fill (a Photoshop CS5 tool that automatically generates content based on the existing surrounding content of the image and fills in the selected area). The resulting image is a potential landscape as interpreted by the software. The image was sent to a painting manufacturer in China where an oil painting was produced.”

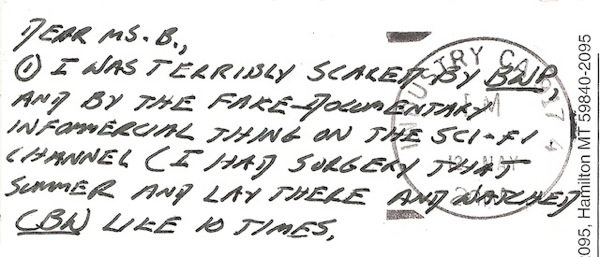

A portion of David Foster Wallace’s personal library now resides at the Ransom Center at the University of Texas at Austin. Maria Bustillos visited the Center and discovered clues to Wallace’s depression scribbled in the margins of several self-help books the writer owned and very carefully read…

One surprise was the number of popular self-help books in the collection, and the care and attention with which he read and reread them. I mean stuff of the best-sellingest, Oprah-level cheesiness and la-la reputation was to be found in Wallace’s library. Along with all the Wittgenstein, Husserl and Borges, he read John Bradshaw, Willard Beecher, Neil Fiore, Andrew Weil, M. Scott Peck and Alice Miller. Carefully.

Much of Wallace’s work has to do with cutting himself back down to size, and in a larger sense, with the idea that cutting oneself back down to size is a good one, for anyone (q.v., the Kenyon College commencement speech, later published as This is Water). I left the Ransom Center wondering whether one of the most valuable parts of Wallace’s legacy might not be in persuading us to put John Bradshaw on the same level with Wittgenstein. And why not; both authors are human beings who set out to be of some use to their fellows. It can be argued, in fact, that getting rid of the whole idea of special gifts, of the exceptional, and of genius, is the most powerful current running through all of Wallace’s work.

(Via kottke.org)

Be Your Own Souvenir project by Blablabla:

“This proposal aims to connect street users, arts and science, linking them to under-laying spaces and their own realities. The installation was enjoyed during two weekends in January 2011 by the tourists, neighbours of La Rambla and citizens of Barcelona, a city that faces a trade-off between identity and gentrification, economic sustainability and economic growth.

This shapes through a technological ritual where the audience is released from established roles in a perspective exchange: spectator-performer, artist-tourist, observer-object.

The user becomes the producer as well as the consumer through a system that invites him/her to perform as a human statue, with a free personal souvenir as a reward: a small figure of him/herself printed three-dimensionally from a volumetric reconstruction of the person generated by the use of three structured light scanners (Kinect).

The project mimics the informal artistic context of this popular street, human sculptures and craftsmen, bringing diverse realities and enabling greater empathy between the agents that cohabit in the public space.”

(Via iGNANT)

Art Tape: Live With / Think About, 2011, by Michael Bell-Smith…

(Via VVORK)

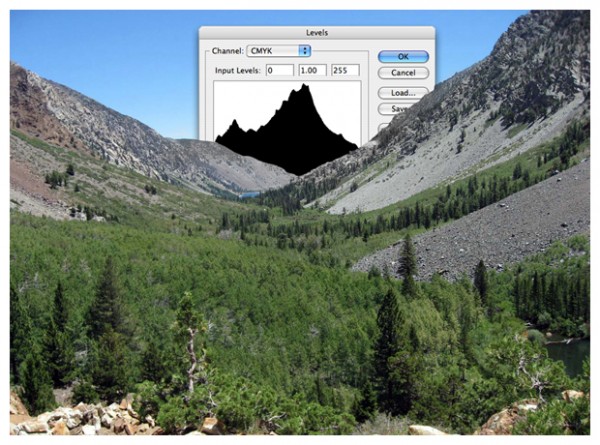

Harvest, interrupted

or, trying to view Breughel in Hi Res with other six applications running

2011

(via)

Cindy Sherman reveals how dressing up in character began as a kind of performance and evolved into her earliest photographic series such as “Bus Riders” (1976), “Untitled Film Stills” (1977-1980), and the untitled rear screen projections (1980)…

Unboxing videos are a very popular genre on YouTube. And yes, most of the time they’re boring. Not this one…

(Via Nerdcore)

Boy George hosts this documentary on the 1980s New Romantic music and fashion scene. Made in 2001 for Britain’s Channel 4…

(Via Dangerous Minds)

Heather Benning’s Dollhouse, 2007, photos on Canadian Art — Breaking and Entering: Haunted Houses…