In his short film Total Pixel Space, filmmaker and musician Jacob Adler employs generative AI to explore the nature of digital images.


Macchine Adulatrici (Sycophantic Machine)

You know that flattering, annoying tone of ChatGPT? Marco Cadioli made a video about it. It’s called “Macchine Adulatrici (Sycophantic Machine)”. The audio is composed of all the phrases the model used to “guide” the author during the creation of the video itself. If you ask me, this is definitely the romantic hit of summer 2025.

The Future Is Now Finally Weird AF

Silvia Dal Dosso’s amazing trilogy is finally complete. And the future surely IS Weird AF.

Brainrot Video Theory


I found a website that converts any PDF into a brainrot TikTok-style video: memenome.gg. This video was originally an academic essay discussing the meaning and usage of the word “art”. I don’t really have any comment to add, except that this is the AI art we need (and probably deserve).

Ana Min Wein? (Where Am I From?)

Ana Min Wein? (Where Am I From?) is a short film by Nouf Aljowaysir in which she talks about her origins and identity in conversation with an AI program. A beautiful meditation on identity, migration, and memory.

[via]

Trisha Code. You can’t stop her

That’s what generative AI should be about.

[via]

I laughed, I cried, It became a part of me

I struggle to find the words to describe this. I’ll just copy-paste one of the comments below the video: “I laughed, I cried, It became a part of me.”

Generative AI is shaping reality

AI generates images of non-existent stuff all the time. But people want that stuff, so they order it, and those images turn into reality. Welcome to the era of AI-assisted e-commerce, aka AI-shaped reality.

[Having taken orders, Chinese factory must actually make massive AI slop gorilla sofas]

Virtual creatures evolving

I’m literally mesmerized by Karl Sims’ digital creatures.

500,000 JPGs and no plans

Video and music by Eryk Salvaggio, inspired by a Reddit post.

I’ve got 20,000 jpgs and no plans

I’ve 30,000 jpgs and no ideas

for what to do with them

I’ve got 40,000 jpgs on a hard drive

what do you guys do with all these pictures

The First Artificial Intelligence Coloring Book

Harold Cohen, Becky Cohen, Penny Nii, The First Artificial Intelligence Coloring Book, 1984. Read the foreword here.

The Quasi Robots

In 2008, Nicolas Anatol Baginski made the “Quasi Robots”, a family of autonomous, disabled machines designed to provoke an emotional response. Probably the weirdest piece of robotic/AI art I’ve ever encountered.

Found in Translation

Eric Drass, aka Shardcore, made this very interesting experiment with generative AI applications: “I arranged a form of Chinese-Whispers between AI systems. I first extracted the keyframes from a scene from American Psycho and asked a multimodal LLM (LLaVA) to describe what it saw. I then took these descriptions and used them as prompts for a Stable Diffusion image generator. Finally I passed these images on to Stable-Video-Diffusion to turn the stills into motion.”

Voice In My Head

Kyle McDonald & Lauren Lee McCarthy developed an AI system that can replace your internal monologue:

“With the proliferation of generated content, AI now seeps constantly into our consciousness. What happens when it begins to intervene directly into your thoughts? Where the people you interact with, the things you do, are guided by an AI enhanced voice that speaks to you the way you’d like to be spoken to.”

One World Moments

“One World Moments is a new experiment in ambient media, which seeks to use the new possibilities enabled by AI image generation to create more specific, evocative, and artistic ambient visuals than have been previously possible on a mass scale.”

[via]

Entropophone

In the work “Entropophone | La qualité de l’air” (Air Quality) by artist Filipe Vilas-Boas, the anonymous video stream of a surveillance camera is transformed into a musical score.

[via]

The Wizard of AI

Alan Warburton did it again.

“‘The Wizard of AI,’ a 20-minute video essay about the cultural impacts of generative AI. It was produced over three weeks at the end of October 2023, one year after the release of the infamous Midjourney v4, which the artist treats as a ‘gamechanger’ for visual cultures and creative economies. According to the artist, the video itself is ‘99% AI’ and was produced using generative AI tools like Midjourney, Stable Diffusion, Runway and Pika. Yet the artist is careful to temper the hype of these new tools, or as he says, to give in to the ‘wonder-panic’ brought about by generative AI. Using creative workflows unthinkable before October 2023, he takes us on a colourful journey behind the curtain of AI, through Oz, pink slime, Kanye’s ‘Futch’ and a deep sea dredge, to explain and critique the legal, aesthetic and ethical problems engendered by AI-automated platforms. Most importantly, he focusses on the real impacts this disruptive wave of technology continues to have on artists and designers around the world.”

Something in the suburbs

Literally No Place

Hello baby dolls, it’s the final boss of vocal fry here. Daniel Felstead’s glossy Julia Fox avatar is back. Last time she took on Zuckerberg’s Metaverse. Now she takes us on a journey into the AI utopian versus AI doomer cyberwarfare bedlam, exploring the stakes, fears, and hopes of all sides. Will AI bring about the post-scarcity society that Marx envisioned, allowing us all to live in labor-less luxury, or will it quite literally extinguish the human race?

Literally No Place, brand new video(art) essay by Daniel Felstead & Jenn Leung

Lore Island at the end of the internet

For the final chapter of Shumon Basar’s Lorecore Trilogy (read the first part here, and the second here), the curator collaborated with Y7, a duo based in Salford, England, who specialize in theory and audiovisual work. Here is the result.

“Here, according to a neologism from ‘The Lexicon of Lorecore,’ the zeitgeist is taken over by ‘Deepfake Surrender’: ‘to accept that soon, everyone or everything one sees on a screen will most likely have been generated or augmented by AI to look and sound more real than reality ever did.’ Y7 and I also agreed that, so far, most material outputted from generative AI apps (ChatGPT, DALL-E, Midjourney) is decidedly mid. But, does it have to be?”

River: a visual connection engine

Max Bittker made a CLIP-based image browser, similar to same.energy. I could explore this thing forever.

[via]

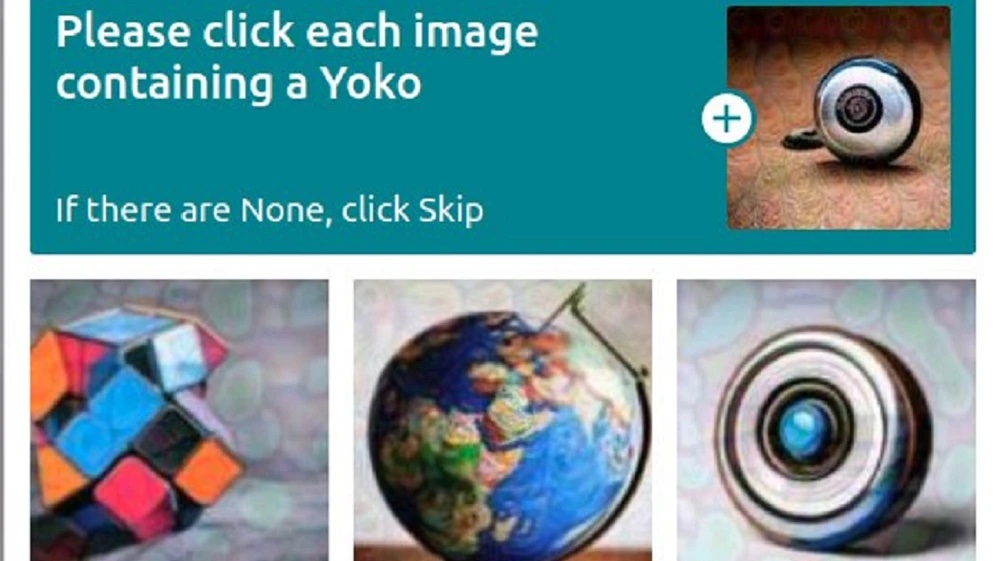

AI, we have a problem

Discord’s captcha asked users to identify a ‘Yoko,’ a snail-like object that does not exist and was created by AI.

[via]

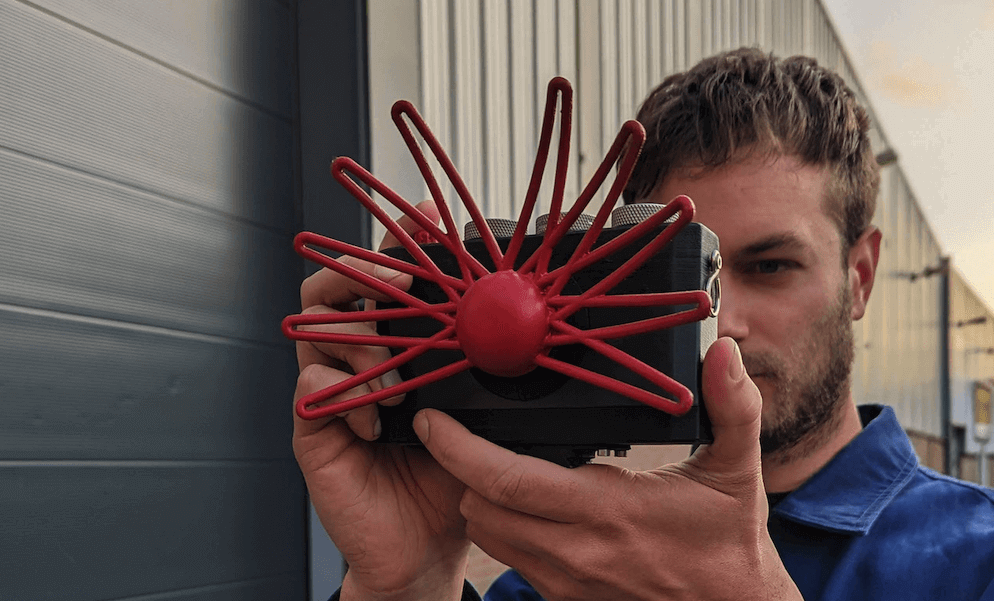

Blind Cameras

I just came across Paragraphica, an interesting project by Bjørn Karmann. It is a camera that uses location data and AI to visualize a “photo” of a specific place and moment. The viewfinder displays a real-time description of your current location, and by pressing the trigger, the camera will create a photographic representation of that description.

It reminded me of two similar new media art projects from the past, which I also showed in a couple of exhibitions I curated (in 2010 and 2012).

The first one is Blinks & Buttons by Sascha Pohflepp, a camera that has no lens. It records the exact time the button is pushed, then goes out and searches for another image taken at that exact moment. Once the camera finds one, it displays the image on the LCD on its back.

The second one is Matt Richardson’s Descriptive Camera, a device that only outputs the metadata about the content and not the content itself.

Update 29/04/24: Kelin Carolyn Zhang and Ryan Mather designed the Poetry Camera, an open-source camera that generates a poem based on a photo.

Confusing Bots

Confuse A Bot is an upcoming in-browser video game where all you have to do is convince the robots that literally everything is cheese. Here’s how creator Rajeev Basu describes the game:

“AI is only as good as its datasets. CONFUSE A BOT is a ‘public service videogame’ that invites players to verify images incorrectly, to confuse bots, and help save humanity from an AI apocalypse. While key figures in AI like Sam Altman have sounded the alarm many times, there has been little action beyond ‘lively debates’ and petitions signed by high-ranking CEOs. Confuse A Bot questions: what if we put the power back into the hands of the people?

How the game works:

– The game pulls in images from the Internet, and asks players to verify them.

– Players verify images incorrectly. The more they do, the more points they get.

– The game automatically re-releases the incorrectly verified images online, for AI to scrape and absorb, thereby helping save humanity from an AI takeover. It’s that easy!”

[via]

Flowers Blooming Backward Into Noise

A short animated documentary by Eryk Salvaggio on how AI image generation works and on the entanglement of composite photography, statistical correlations, and racist ideologies.